Install CLI

Run HolmesGPT from your terminal as a standalone CLI tool.

Installation Options

Homebrew:

1. Add our tap:
2. Install HolmesGPT:
3. To upgrade to the latest version:
4. Verify installation:

pipx:

1. Install pipx.
2. Install HolmesGPT:
3. Verify installation:

Poetry (from source):

For development or custom builds:

1. Install Poetry.
2. Install HolmesGPT:
3. Verify installation:
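Whichever installer you used, the "Verify installation" step comes down to confirming that the `holmes` command is on your `PATH` and responds. Below is a minimal sketch for a POSIX-compatible shell. `check_holmes` is a hypothetical helper name. `holmes --help` is used only because it needs neither an API key nor cluster access.

```shell
# Hypothetical helper: report whether the holmes CLI is reachable on PATH.
check_holmes() {
  if command -v holmes >/dev/null 2>&1; then
    echo "holmes found at $(command -v holmes)"
    return 0
  fi
  echo "holmes not found on PATH; re-check the install steps above" >&2
  return 1
}

# Print the CLI help only when the binary was found.
check_holmes && holmes --help
```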

Docker:

Run HolmesGPT using the prebuilt Docker container:

```shell
docker run -it --net=host \
  -e OPENAI_API_KEY="your-api-key" \
  -v ~/.holmes:/root/.holmes \
  -v ~/.aws:/root/.aws \
  -v ~/.config/gcloud:/root/.config/gcloud \
  -v $HOME/.kube/config:/root/.kube/config \
  us-central1-docker.pkg.dev/genuine-flight-317411/devel/holmes ask "what pods are unhealthy and why?"
```

Note: Use `-e` flags to pass API keys for your provider (e.g., `-e ANTHROPIC_API_KEY`, `-e GEMINI_API_KEY`). See Environment Variables Reference for the complete list.

Quick Start

Choose your AI provider (see all providers for more options).

Which Model to Use

We highly recommend Claude Sonnet 4.0 or Sonnet 4.5, which give the best results by far. These models are available from Anthropic, AWS Bedrock, and Google Vertex AI. View Benchmarks.
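Each provider walkthrough that follows asks HolmesGPT about a pod named `user-profile-import`. The demo manifest that creates that pod is not reproduced on this page. As a hypothetical stand-in, the sketch below writes a pod with that name whose image tag does not exist, so the pod should fail with an image-pull error that HolmesGPT can investigate. The container name, image tag, and file name are all illustrative assumptions.

```shell
# Hypothetical stand-in for the demo workload: a pod named user-profile-import
# whose image tag does not exist, so it should end up in ErrImagePull/ImagePullBackOff.
cat > user-profile-import.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: user-profile-import
  labels:
    app: user-profile-import
spec:
  containers:
    - name: importer
      image: busybox:this-tag-does-not-exist
      command: ["sh", "-c", "echo importing profiles; sleep 3600"]
EOF
```

Apply it to your current cluster with `kubectl apply -f user-profile-import.yaml`. Remove it afterwards with `kubectl delete -f user-profile-import.yaml`.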

Anthropic:

1. Set up API key:
2. Create a test pod to investigate:
3. Ask your first question:
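Below is a minimal sketch of the key setup and first question for Anthropic. It assumes the `user-profile-import` test pod already exists in your current kube context. The key is a placeholder. The model string `anthropic/claude-sonnet-4-5-20250929` is an assumption based on LiteLLM-style `provider/model` naming, so check Anthropic Configuration for the exact names HolmesGPT accepts.

```shell
# Placeholder value: replace with your real Anthropic API key.
export ANTHROPIC_API_KEY="your-api-key"

# Ask about the test pod. The model string is an assumed "provider/model" name.
# The guard skips the call if holmes is not installed yet.
if command -v holmes >/dev/null 2>&1; then
  holmes ask "what is wrong with the user-profile-import pod?" \
    --model="anthropic/claude-sonnet-4-5-20250929"
fi
```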

Note: You can use any Anthropic model by changing the model name. See Claude Models Overview for available model names.

See Anthropic Configuration for more details.

OpenAI:

1. Set up API key:
2. Create a test pod to investigate:
3. Ask your first question:
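Below is a minimal sketch of the same flow for OpenAI, again assuming the test pod exists. `OPENAI_API_KEY` matches the variable used in the Docker example above. The model name `gpt-4.1` is only an assumption, so substitute any OpenAI model your account can use.

```shell
# Placeholder value: replace with your real OpenAI API key.
export OPENAI_API_KEY="your-api-key"

# The model name is an assumption; any OpenAI chat model you can access should do.
if command -v holmes >/dev/null 2>&1; then
  holmes ask "what is wrong with the user-profile-import pod?" --model="gpt-4.1"
fi
```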

See OpenAI Configuration for more details.

Azure OpenAI:

1. Set up API key:
2. Create a test pod to investigate:
3. Ask your first question:
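Below is a minimal sketch for Azure OpenAI. The variable names `AZURE_API_KEY`, `AZURE_API_BASE`, and `AZURE_API_VERSION` are assumptions that follow LiteLLM conventions, so confirm them in Azure OpenAI Configuration. The endpoint and API version echo the `azure-5` entry in the model list example further down. `<your-deployment-name>` is a placeholder for your Azure deployment.

```shell
# Assumed variable names (LiteLLM conventions); values are placeholders.
export AZURE_API_KEY="your-api-key"
export AZURE_API_BASE="https://your-resource.openai.azure.com"
export AZURE_API_VERSION="2025-01-01-preview"

# Replace the placeholder with the name of your Azure deployment.
if command -v holmes >/dev/null 2>&1; then
  holmes ask "what is wrong with the user-profile-import pod?" \
    --model="azure/<your-deployment-name>"
fi
```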

See Azure OpenAI Configuration for more details.

AWS Bedrock:

1. Set up API key:
2. Install boto3:
3. Create a test pod to investigate:
4. Ask your first question:
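Below is a minimal sketch of the credential setup and first question for AWS Bedrock. It assumes boto3 is already installed and the test pod exists. `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` are the standard AWS variables. `AWS_REGION_NAME` is an assumption based on LiteLLM conventions. The model ID is the one used in the model list example further down.

```shell
# Placeholder credentials; AWS_REGION_NAME is an assumed variable name.
export AWS_ACCESS_KEY_ID="your-access-key"
export AWS_SECRET_ACCESS_KEY="your-secret-key"
export AWS_REGION_NAME="us-east-1"

# Bedrock model ID taken from this page's model list example.
if command -v holmes >/dev/null 2>&1; then
  holmes ask "what is wrong with the user-profile-import pod?" \
    --model="bedrock/us.anthropic.claude-sonnet-4-5-20250929-v1:0"
fi
```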

See AWS Bedrock Configuration for more details.

Google Gemini:

1. Set up API key:
2. Create a test pod to investigate:
3. Ask your first question:
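Below is a minimal sketch for Google Gemini, assuming the test pod exists. `GEMINI_API_KEY` is the variable named in the Docker note above. The model string `gemini/gemini-2.5-pro` is an assumption based on LiteLLM-style naming, so check Google Gemini Configuration for supported names.

```shell
# Placeholder value: replace with your real Gemini API key.
export GEMINI_API_KEY="your-api-key"

# The model string is an assumed "provider/model" name.
if command -v holmes >/dev/null 2>&1; then
  holmes ask "what is wrong with the user-profile-import pod?" \
    --model="gemini/gemini-2.5-pro"
fi
```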

See Google Gemini Configuration for more details.

Google Vertex AI:

1. Set up credentials:
2. Create a test pod to investigate:
3. Ask your first question:
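Below is a minimal sketch for Google Vertex AI. `GOOGLE_APPLICATION_CREDENTIALS` is the standard Google variable pointing at a service-account key file. `VERTEXAI_PROJECT` and `VERTEXAI_LOCATION` are assumptions based on LiteLLM conventions, and so is the model string `vertex_ai/gemini-2.5-pro`. Confirm all of them in Google Vertex AI Configuration.

```shell
# Path, project, and region are placeholders; the VERTEXAI_* names are assumed.
export GOOGLE_APPLICATION_CREDENTIALS="/path/to/service-account.json"
export VERTEXAI_PROJECT="your-project-id"
export VERTEXAI_LOCATION="us-central1"

# The model string is an assumed "provider/model" name.
if command -v holmes >/dev/null 2>&1; then
  holmes ask "what is wrong with the user-profile-import pod?" \
    --model="vertex_ai/gemini-2.5-pro"
fi
```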

See Google Vertex AI Configuration for more details.

Ollama:

1. Set up API key: no API key is required for a local Ollama installation.
2. Create a test pod to investigate:
3. Ask your first question:

```shell
holmes ask "what is wrong with the user-profile-import pod?" --model="ollama_chat/<your-model-name>"
```

For troubleshooting and advanced options, see Ollama Configuration.

Warning: Ollama can be tricky to configure correctly. We recommend trying HolmesGPT with a hosted model first (like Claude or OpenAI) to ensure everything works before switching to Ollama.

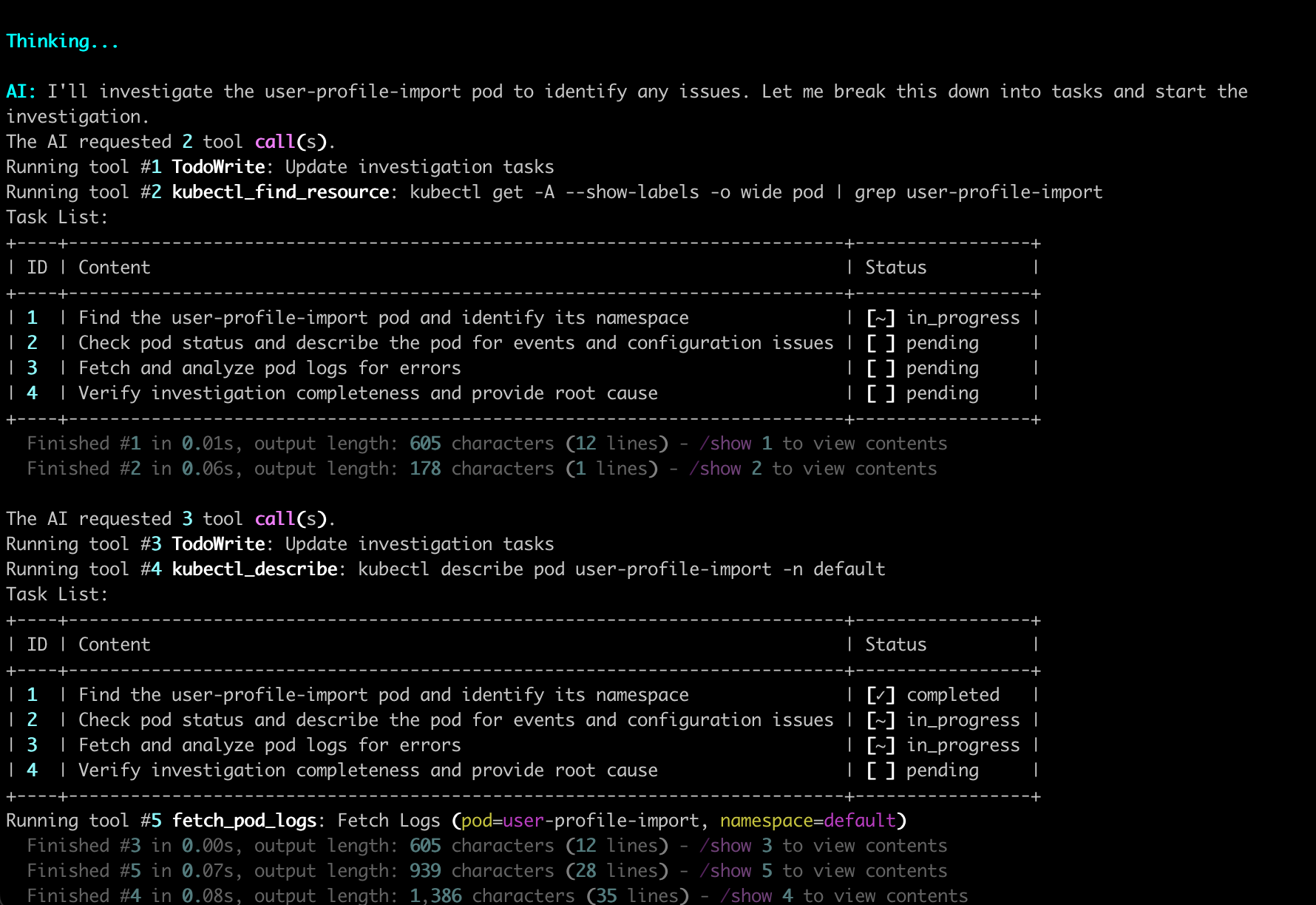

After running the command, HolmesGPT begins its automated investigation, as shown below.

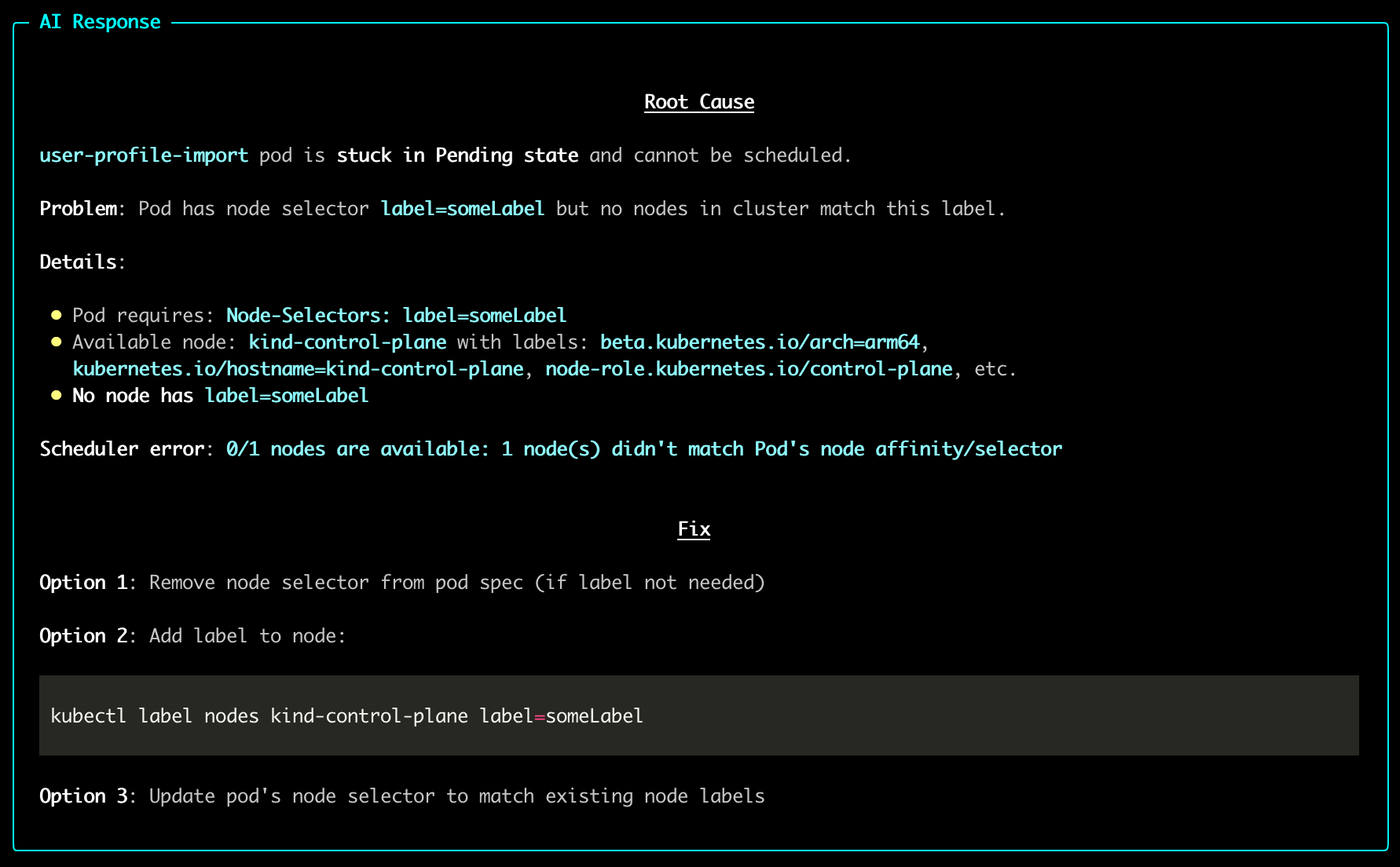

Once the analysis completes, HolmesGPT provides a clear root-cause summary and fix suggestions.

Using Model Lists

You can define multiple models in a YAML file and reference them by name in the CLI. This is useful when you have multiple model configurations with different API keys, endpoints, or parameters.

1. Create a model list file:

```yaml
# model_list.yaml
sonnet:
  aws_access_key_id: "your-access-key"
  aws_region_name: us-east-1
  aws_secret_access_key: "your-secret-key"
  model: bedrock/us.anthropic.claude-sonnet-4-5-20250929-v1:0
  temperature: 1
  thinking:
    budget_tokens: 10000
    type: enabled

azure-5:
  api_base: https://your-resource.openai.azure.com
  api_key: "your-api-key"
  api_version: 2025-01-01-preview
  model: azure/gpt-5
  temperature: 0
```

2. Set the environment variable:

3. Use models by name:

```shell
holmes ask "what pods are failing?" --model=sonnet --no-interactive
holmes ask "analyze deployment" --model=azure-5 --no-interactive
```

When using `--model`, pass the model's key from your YAML file (for example, `sonnet` or `azure-5`), not the underlying model identifier. All of that entry's configuration (API keys, endpoints, temperature, and so on) is loaded automatically from the model list file.

Note: Environment variable substitution is supported in the model list file using the `{{ env.VARIABLE_NAME }}` syntax.

See Environment Variables Reference for more details.
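The sketch below puts the model list steps together with environment variable substitution. It writes a one-entry model list whose API key is read from the environment, then points HolmesGPT at the file. The variable name `MODEL_LIST_FILE_LOCATION` is an assumption, so confirm it in the Environment Variables Reference. `AZURE_API_KEY` is just an illustrative variable name. The substitution value is quoted because an unquoted YAML value starting with `{` would be parsed as a flow mapping.

```shell
# Write the model list. The quoted 'EOF' delimiter stops the shell from
# expanding anything, so {{ env.AZURE_API_KEY }} reaches the file verbatim
# and is left for HolmesGPT to substitute when it loads the file.
cat > model_list.yaml <<'EOF'
azure-5:
  api_base: https://your-resource.openai.azure.com
  api_key: "{{ env.AZURE_API_KEY }}"
  api_version: 2025-01-01-preview
  model: azure/gpt-5
  temperature: 0
EOF

# Assumed variable name: tells HolmesGPT where the model list lives.
export MODEL_LIST_FILE_LOCATION="$PWD/model_list.yaml"
```

Export `AZURE_API_KEY` in the same shell, then run `holmes ask "analyze deployment" --model=azure-5 --no-interactive`. HolmesGPT should select the entry by its key.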

Next Steps

- Add Data Sources - Use the built-in toolsets to connect HolmesGPT to AWS, Prometheus, Loki, New Relic, Datadog, ArgoCD, Confluence, and other tools.

- Connect MCP Servers - Extend capabilities with external MCP servers.

Need Help?

- Join our Slack - Get help from the community.

- Request features on GitHub - Suggest improvements or report bugs.

- Troubleshooting guide - Common issues and solutions.